Performance and costs

Hardware Performance

When evaluating hardware performance, several key factors come into play. The CPU, or central processing unit, is often the heart of the system, and its performance can be gauged by its clock speed, measured in gigahertz (GHz), the number of cores and threads it possesses, its instructions per cycle (IPC), and the size and speed of its cache. These elements collectively determine how quickly and efficiently a CPU can process tasks.
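As a rough illustration of how these factors combine, the sketch below estimates an idealized upper bound on instruction throughput as cores × clock speed × IPC. The function name and both CPU configurations are hypothetical, and the model deliberately ignores cache behavior, memory stalls, and imperfect scaling across cores.

```python
def peak_instruction_rate(cores: int, clock_ghz: float, ipc: float) -> float:
    """Idealized upper bound on instructions per second (assumes perfect scaling)."""
    return cores * clock_ghz * 1e9 * ipc


# Two hypothetical CPUs: A has the higher clock speed, B has the higher IPC.
cpu_a = peak_instruction_rate(cores=8, clock_ghz=4.0, ipc=3.0)
cpu_b = peak_instruction_rate(cores=8, clock_ghz=3.5, ipc=4.0)

print(f"CPU A: {cpu_a:.2e} instructions/s")
print(f"CPU B: {cpu_b:.2e} instructions/s")
```

In this made-up comparison, CPU B comes out ahead despite its lower clock speed, which is why clock speed alone is a poor proxy for CPU performance.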

Memory performance is another critical aspect, with bandwidth and latency being the primary metrics. Bandwidth refers to the amount of data that can be transferred to and from memory per second, while latency measures the delay before a transfer begins following an instruction. High bandwidth and low latency are desirable for optimal performance.
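A simple way to see how the two metrics interact is to model the time for one transfer as a fixed latency plus the data size divided by the bandwidth. The sketch below uses that model; the function name and the latency and bandwidth figures are illustrative assumptions, not measurements of any particular memory system.

```python
def transfer_time_s(size_bytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Simple model: fixed startup latency plus size divided by bandwidth."""
    return latency_s + size_bytes / bandwidth_bytes_per_s


# Hypothetical memory system: 100 ns latency, 50 GB/s bandwidth.
LATENCY_S = 100e-9
BANDWIDTH_BYTES_PER_S = 50e9

small = transfer_time_s(64, LATENCY_S, BANDWIDTH_BYTES_PER_S)   # one 64-byte transfer
large = transfer_time_s(1e9, LATENCY_S, BANDWIDTH_BYTES_PER_S)  # one 1 GB transfer

print(f"64 B transfer: {small * 1e9:.2f} ns")
print(f"1 GB transfer: {large * 1e3:.2f} ms")
```

Under these assumptions the small transfer is dominated by latency and the large one by bandwidth, so which metric matters more depends on the access pattern.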

In addition to the CPU and memory, hardware accelerators such as integrated media engines, GPUs (graphics processing units), and NPUs (neural processing units) play significant roles in enhancing performance, especially for specialized tasks like video processing, gaming, and machine learning.

Computing power is often quantified in terms of floating-point operations per second (FLOPS), which indicates the system’s ability to handle complex mathematical calculations. Storage performance, on the other hand, is evaluated based on capacity, speed, and reliability. Faster storage solutions can significantly reduce load times and improve overall system responsiveness.
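To make the FLOPS figure concrete, the following sketch computes a theoretical peak from core count, clock speed, and floating-point operations per cycle, then uses it to estimate the runtime of a workload. All names and numbers are hypothetical; in particular, the operations-per-cycle value and the 50% sustained efficiency are assumptions chosen only for illustration.

```python
def peak_flops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak floating-point operations per second."""
    return cores * clock_ghz * 1e9 * flops_per_cycle


def runtime_s(total_flop: float, sustained_flops: float) -> float:
    """Estimated runtime for a workload needing total_flop operations."""
    return total_flop / sustained_flops


# Hypothetical CPU: 8 cores at 3.0 GHz, 16 floating-point operations per cycle per core.
peak = peak_flops(cores=8, clock_ghz=3.0, flops_per_cycle=16)

# Assumption: real code sustains only half of the theoretical peak.
sustained = 0.5 * peak

# Hypothetical workload requiring 1e15 floating-point operations.
estimate = runtime_s(1e15, sustained)

print(f"Peak: {peak / 1e9:.0f} GFLOPS")
print(f"Estimated runtime: {estimate / 3600:.2f} hours")
```

Real applications rarely reach the theoretical peak, so a measured sustained rate is a better basis for runtime estimates when one is available.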

I/O devices, which include peripherals like keyboards, mice, and external drives, are assessed based on their speed, latency, compatibility, and user experience. Efficient I/O performance ensures smooth and seamless interaction with the system.

Thermal performance, scalability, energy consumption, design, size, weight, and battery life are also important considerations. Effective thermal management prevents overheating and maintains system stability, while scalability ensures that the system can grow with increasing demands. Energy-efficient designs reduce operational costs and environmental impact, and compact, lightweight systems with long battery life are particularly valuable for mobile and portable applications.

Ultimately, the importance of each of these factors depends on the specific workflow and use case. For instance, a gaming setup might prioritize GPU performance and thermal management, while a data center might focus on scalability and energy efficiency. Understanding these various elements and how they interact is crucial for optimizing hardware performance to meet the needs of any given task.

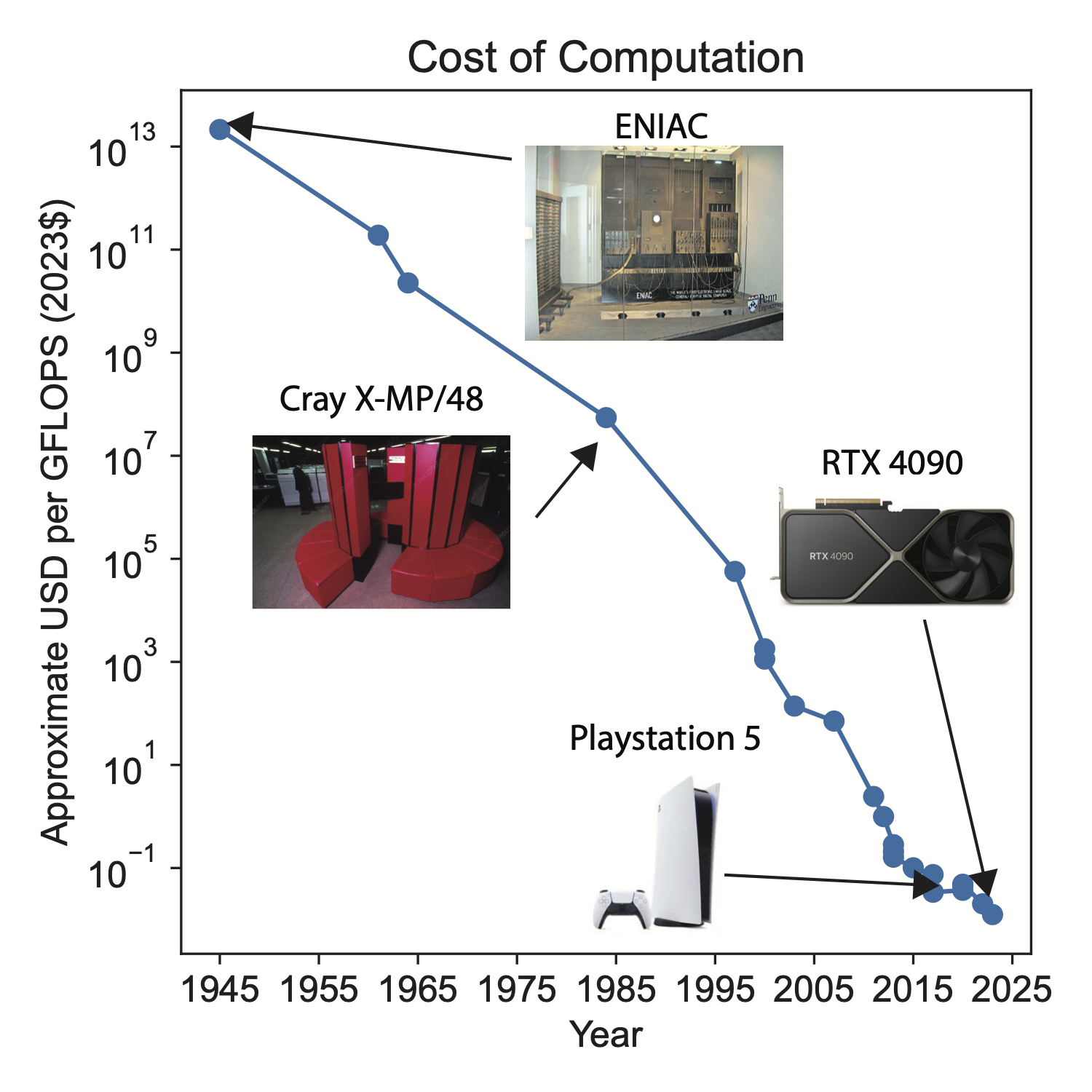

Cost of Computation

The cost of computation is decreasing, so computational modeling is becoming increasingly cost-effective compared to experiments.

Moore’s law, which observes that the number of transistors in an integrated circuit doubles approximately every two years, has been a guiding principle in the semiconductor industry. It is an empirical trend rather than a law of physics, yet it has tracked the rapid growth of computational power for decades. As a result, the cost of computation has decreased significantly over time, making computational modeling an increasingly cost-effective alternative to traditional experimental methods.
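The doubling rule can be written as a one-line projection. The sketch below assumes an idealized, exact two-year doubling period; the function name is hypothetical, and real transistor counts only follow this curve approximately.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward assuming a fixed doubling period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Growth factor relative to a starting count of 1.
growth_10y = projected_transistors(1.0, 10)
growth_20y = projected_transistors(1.0, 20)

print(f"After 10 years: {growth_10y:.0f}x")
print(f"After 20 years: {growth_20y:.0f}x")
```

Under this idealized assumption, a decade gives five doublings (a factor of 32) and two decades give ten (a factor of 1024).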

Despite these advancements, we are now approaching the physical limits of transistor miniaturization. The costs associated with further reducing transistor size and improving fabrication techniques are growing exponentially. Additionally, other expenses such as electricity, cooling, manpower, and space continue to be significant factors. Thus, while computational costs have dropped, the overall cost landscape remains complex and multifaceted.
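As one example of these recurring expenses, the sketch below estimates a yearly electricity bill for a system that runs continuously. The function name, the power draw, the electricity price, and the overhead factor (a multiplier standing in for cooling and other facility power) are all hypothetical; staffing and space costs are not modeled.

```python
HOURS_PER_YEAR = 24 * 365


def annual_electricity_cost(it_power_kw: float, price_per_kwh: float,
                            overhead_factor: float = 1.0) -> float:
    """Yearly electricity cost for equipment running around the clock.

    overhead_factor scales the equipment power to include cooling and
    other facility overhead (1.0 means no overhead).
    """
    return it_power_kw * overhead_factor * HOURS_PER_YEAR * price_per_kwh


# Hypothetical system: 10 kW draw, 0.20 currency units per kWh, 50% overhead.
cost = annual_electricity_cost(it_power_kw=10.0, price_per_kwh=0.20, overhead_factor=1.5)

print(f"Estimated annual electricity cost: {cost:,.0f}")
```

Even this simplified estimate shows that operating costs recur every year regardless of how cheap the hardware itself has become.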